We shipped 4 new integrations in 1 day with AI (and you can too)

Learn how RunReveal integrates AI agents into its SDLC to automate integration creation, building 4 new ones in one day with minimal human effort.

Many lifetimes have been spent by software engineers building new log parsers and ETL processes for SaaS products. Will we ever get to a point where human toil isn't required for these simple problems?

RunReveal has started using a development process where AI Agents can easily work alongside our engineers and do this work for us. We've seen a huge increase in velocity using this process and we figured we should blog about it because…it's really really easy to set up (we promise).

We've long told our customers that we'll support any log integration they need because we figured at some point robots would do it for us! On Friday we added support for:

- Cloudflare Gateway Network logs

- Cloudflare Gateway Access logs

- BitWarden logs

- Sophos logs

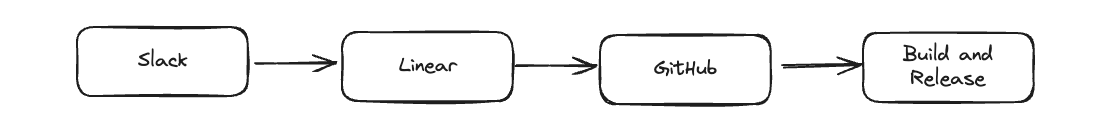

A Standard Software Development Lifecycle

All SDLCs are pretty similar; regardless of the complexities or nuances, they essentially boil down to a cyclical pattern of planning, building, testing, and finally repeating the process. Some methodologies put more emphasis on certain phases, but this simple process is more or less the way we build and maintain software.

Along with the process, there's a technology and tooling aspect that helps us with each stage.

- Ticketing systems: We use Linear to track customer issues, bug reports, feature requests, etc.

- Code repositories: We commit our code and perform reviews in GitHub.

- CI/CD: We run tests and do our builds in GitHub Actions, since it's integrated with GitHub.

- Collaboration software: It's worth calling out that our customers report bugs to us and submit feature requests directly in shared Slack channels.

Our process, along with the technology, ends up looking something more akin to this:

Without doing anything special ourselves, we realized that we can wire up all of these tools end to end so that we have a full AI coding assistant that can seamlessly help work on customer issues and features.

Our AI Software Development Process

The crux of what we discovered was that all of the tools we're using integrate together extremely well. For an AI coding agent to work alongside us, its interface needs to tie in closely with whatever process we were already using.

Below is a breakdown of our process.

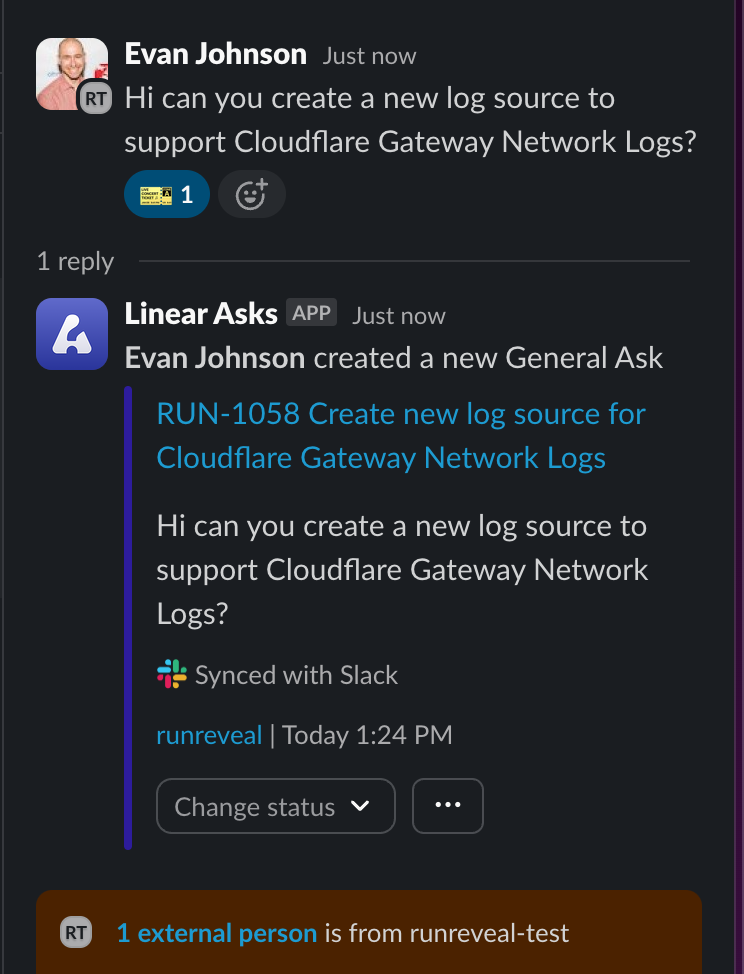

Integrate Slack with Linear using Linear Asks

The Linear Asks tool is really helpful for companies that work closely with their customers in shared Slack channels. When a customer notices a bug they can tell us about it in our shared Slack channel, and we use the 🎫 emoji to automatically create a Linear ticket.

Those requests land in a triage queue where we can accept them, add context, set due dates, etc. Each ticket also contains all of the context and back-and-forth from the Slack thread, which is very useful.

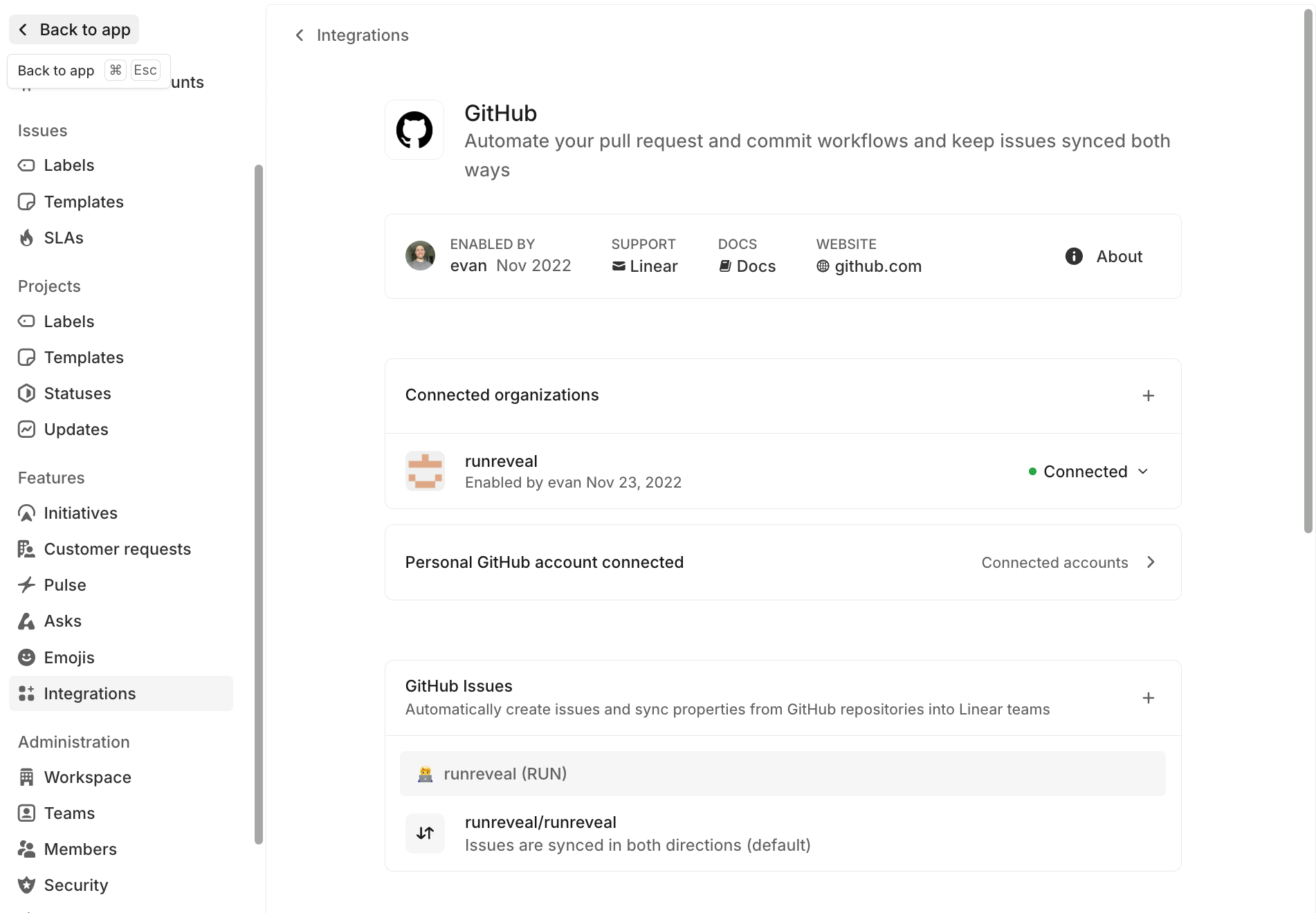

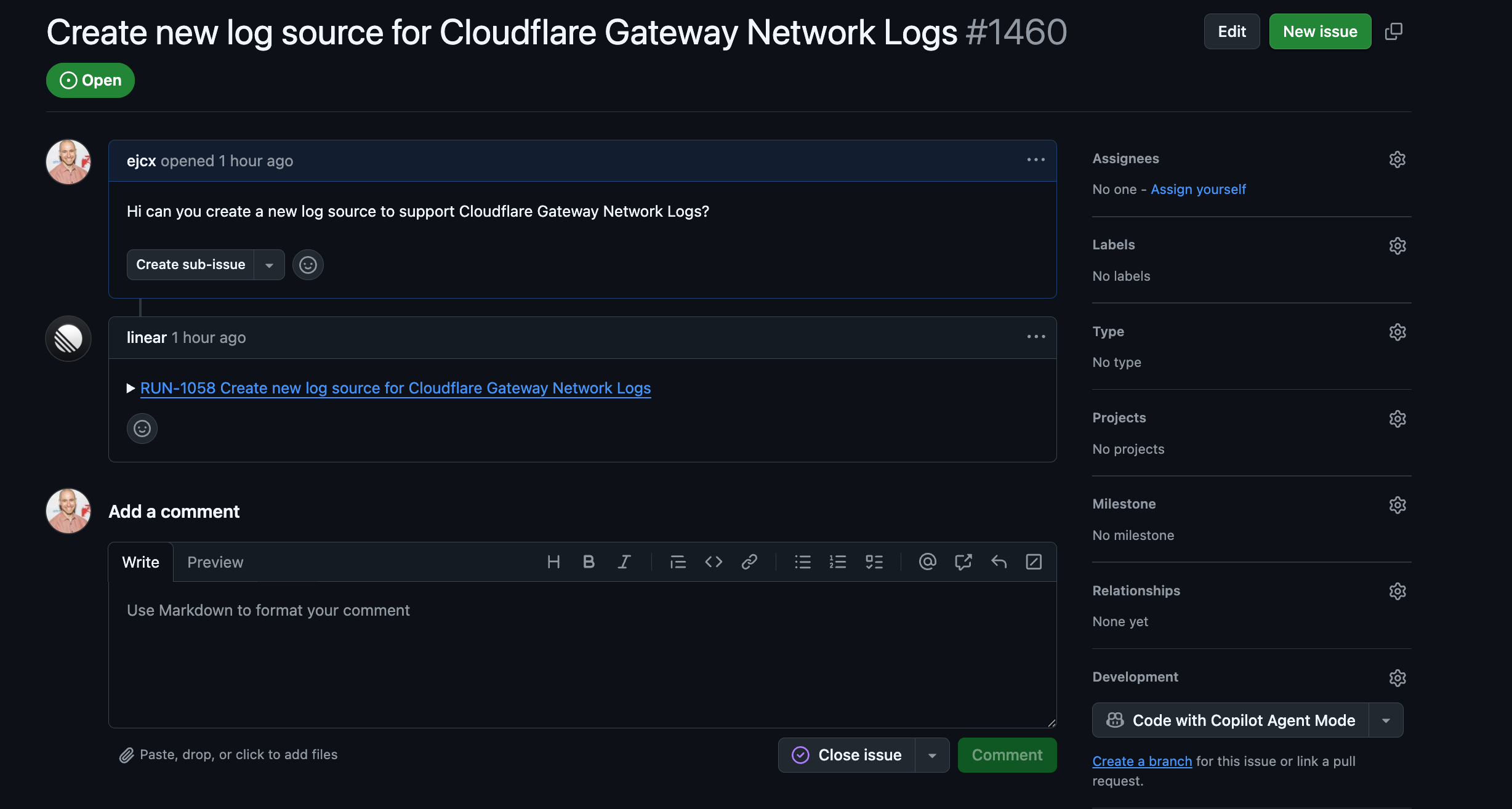

Two-way sync between Linear and GitHub Issues

Once the Linear issue is created and accepted, the ticket is automatically provisioned to GitHub. We enabled this in Linear with two-way sync between GitHub and Linear, and we provision all tickets to our monorepo.

This two-way integration allows us to keep tickets in sync between GitHub and Linear without needing to worry about closing them in two places, keeping context up to date in two places, etc.

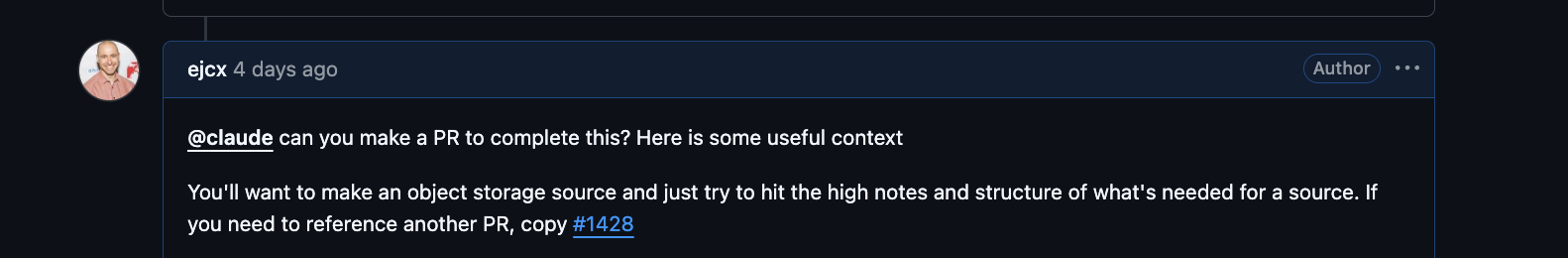

Claude Code as a GitHub Action

Finally, Claude Code running as a GitHub Action in our monorepo allows us to invoke an AI developer directly from the GitHub issue. While the above screenshots are examples to show the syncing, below is how I prompted Claude Code to build one of our new integrations. I also copied and pasted the steps required to make a source, which we had documented in an internal document as a giant wall of text, and included the format of the logs from Cloudflare's docs (which I omitted in the screenshot).

It worked perfectly the first time, cost about $4, and took about 15 minutes to run.

After the pull request was created I changed a few minor things: I switched snake_case to camelCase (and added this preference to my CLAUDE.md), renamed the source to accurately reflect how Cloudflare refers to it, and made a few other small tweaks.

After that it was ready for prod, we merged it, and it worked great!

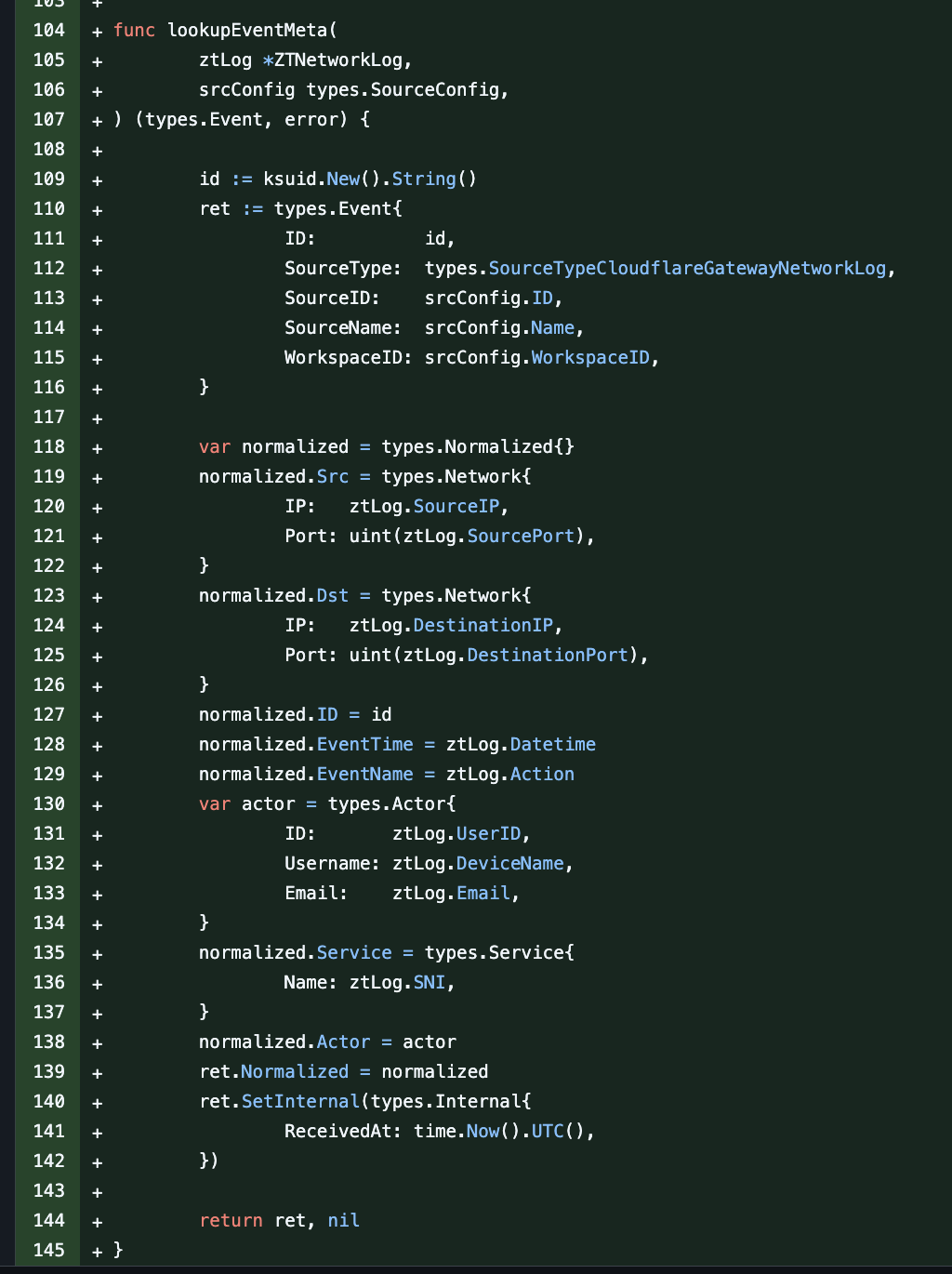

What's in an integration?

Precisely what did Claude Code build for us? One of the reasons it's so easy to offload this task to AI is that we've structured the task to be as simple as possible.

- We take log samples and our testing ensures that those log samples parse correctly.

- Ingest methods (Object Storage versus Webhook versus API Polling) are abstracted away completely.

- UI work is almost completely abstracted away into a singular component across all sources.
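To make the first point concrete, here is a minimal sketch of a sample-driven check. It assumes the samples are newline-delimited JSON objects, which may not match every source, and the function name failedSamples is hypothetical rather than something from our codebase.

```go
package main

import (
	"bufio"
	"encoding/json"
	"strings"
)

// failedSamples is a hypothetical helper: it scans newline-delimited
// log samples and returns the 1-based line numbers of the samples that
// do not decode as a JSON object. Blank lines are skipped.
func failedSamples(samples string) []int {
	var failed []int
	sc := bufio.NewScanner(strings.NewReader(samples))
	n := 0
	for sc.Scan() {
		n++
		line := strings.TrimSpace(sc.Text())
		if line == "" {
			continue
		}
		// Decoding into a map rejects anything that is not a JSON object.
		var obj map[string]json.RawMessage
		if err := json.Unmarshal([]byte(line), &obj); err != nil {
			failed = append(failed, n)
		}
	}
	return failed
}
```

A test for a new source can then assert that failedSamples returns nothing for that source's sample file, so a parsing regression shows up in CI rather than in production.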

The bulk of what we at RunReveal do when we make a new source is:

- Parse the log into a Go struct.

- Normalize the log (pull out Actor Emails, IP addresses, etc).

- Save the original log as rawLog (a json.RawMessage) for future flexible usage.

- A bunch of boilerplate we haven't yet abstracted away.
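The steps in this list can be sketched roughly as follows. This is an illustration under assumptions, not our production code: the gatewayLog and normalizedEvent types, their field names, and the normalize function are all hypothetical, and the log shape is not taken from any vendor's documented schema.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net"
	"strings"
)

// gatewayLog is a hypothetical vendor log shape used only for illustration.
type gatewayLog struct {
	Email    string `json:"Email"`
	SourceIP string `json:"SourceIP"`
	Action   string `json:"Action"`
}

// normalizedEvent is a hypothetical normalized record. RawLog keeps the
// untouched original so fields can be re-extracted later.
type normalizedEvent struct {
	ActorEmail string
	SrcIP      string
	EventName  string
	RawLog     json.RawMessage
}

// normalize parses one log line into a Go struct, pulls out the common
// fields, and stores a copy of the original bytes.
func normalize(line []byte) (normalizedEvent, error) {
	var parsed gatewayLog
	if err := json.Unmarshal(line, &parsed); err != nil {
		return normalizedEvent{}, fmt.Errorf("parse log: %w", err)
	}
	ev := normalizedEvent{
		ActorEmail: strings.ToLower(strings.TrimSpace(parsed.Email)),
		EventName:  parsed.Action,
		// Copy the bytes so the event does not alias the caller's buffer.
		RawLog: append(json.RawMessage(nil), line...),
	}
	// Only keep the source IP if it is a valid address.
	if ip := net.ParseIP(parsed.SourceIP); ip != nil {
		ev.SrcIP = ip.String()
	}
	return ev, nil
}
```

Keeping the raw bytes alongside the normalized fields means a later schema change only requires re-reading RawLog, not re-ingesting the source.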

The AI handles these tasks with ease. Claude Code and other LLMs are very well suited to boilerplate when given a task list. And the crux of the Go code it generates is this function here, lookupEventMeta, which is also fairly straightforward.

Thoughts

We generated four new integrations on Friday (and one on Saturday). It cost us next to nothing, and all four worked on the first try with only minor changes being necessary for them to be production-ready.

The reason this process works so successfully for us is:

- We have consistent coding and SDLC practices.

- We use a monorepo that is well-tested and simple to understand.

- The internal process for this type of work is well known and fully documented.

- The technologies we use to support this process integrate together seamlessly.

Solving a business goal with an LLM comes down to people, process, and technology. Fitting an LLM neatly into an existing process can be an amazing unlock, and in this case we no longer need humans to always be working on our log sources.