Introducing Detection as Code Beta Support in RunReveal

Today we're announcing that RunReveal fully supports detection as code and it's available in beta for all RunReveal customers immediately. RunReveal's detection as code implementation is built as a first-class feature of our product because Detection as Code is an important step towards maturing every security engineering stack.

If there's one thing the RunReveal team has learned about coding, it's that sometimes the tiniest implementation details can make a product or software tool feel either magical or half-baked. Problems and rough edges in code are amplified, which is why developer tooling and experience are so important and so challenging.

These days, configuration as code, infrastructure as code, detection as code, and the like are an expectation across all of software development, never the main selling point. But these tools are full of sharp edges and poor usability. In this post we'll show you how our Detection as Code features work and the developer pain points we tried to avoid.

Bootstrapping your detection repo

Most folks these days are going to want to fill a git repo with their detections and work on them with the editor of their choosing, but how do you bootstrap that?

If you've used a tool like Terraform, you quickly realize that cloud resources have varied lifecycles: some resources are created in the UI and others via configuration-as-code tooling. Resolving differences in state, or reconciling these differences in lifecycle, can quickly become problematic and frustrating.

With RunReveal, we made it incredibly simple to bootstrap your entire detection repository at any time. The detection as code experience starts with our main CLI. The runreveal CLI supports searching your logs from the command line, among other features, and it drives the detection as code features too.

If you already have a RunReveal account, logs, and detections, then getting started is as simple as installing the CLI, logging in, and exporting:

$ brew tap runreveal/runreveal
$ brew install runreveal
$ runreveal init
$ runreveal detections export -d ~/my-detection-repo

This command handles the edge cases that arise when writing a bunch of files to a directory, such as naming collisions. It was also designed so that if you manage your detections as code and later create a new detection in the UI, you can easily import it into your repo in whatever format you're using:

$ runreveal detections export -d <directory> -f <json/yaml> -n <detection name>

I just ran the export in our own RunReveal, Inc. instance, and the result is a directory structure organized by log source type. It can optionally be flattened with the --flat flag.

:) ls detections/okta
disabled-okta-user-access.sql
disabled-okta-user-access.yaml
okta-anomalous-third-party.sql
okta-anomalous-third-party.yaml
okta-brute-force.sql
okta-brute-force.yaml
okta-threat-intelligence.sql
okta-threat-intelligence.yaml
okta-unauthorized-access.sql
okta-unauthorized-access.yaml
okta-user-session-impersonation.sql
okta-user-session-impersonation.yaml
okta-user-suspicious-report.sql
okta-user-suspicious-report.yaml
user-access-new-country.sql
user-access-new-country.yaml
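Each detection in the export is a pair of files: a .yaml file with its metadata and a .sql file with its query. Once you start editing the repo by hand, it's easy to rename or delete one half of a pair. Here's a minimal Python sketch of a pre-commit style check that flags any metadata file without a matching query file, or the reverse. The `unpaired` and `unpaired_in_dir` helpers are hypothetical and not part of the runreveal CLI; the sketch assumes the YAML format by default, and that a JSON export would use a .json extension (pass `meta_suffix=".json"` in that case).

```python
from pathlib import Path, PurePosixPath


def unpaired(paths: list[str], meta_suffix: str = ".yaml", query_suffix: str = ".sql") -> list[str]:
    """Return, sorted, every metadata or query file whose partner file is missing."""
    files = {PurePosixPath(p) for p in paths}
    missing = []
    for path in files:
        if path.suffix == meta_suffix:
            partner = path.with_suffix(query_suffix)
        elif path.suffix == query_suffix:
            partner = path.with_suffix(meta_suffix)
        else:
            continue  # ignore READMEs and any other non-detection files
        if partner not in files:
            missing.append(str(path))
    return sorted(missing)


def unpaired_in_dir(directory: str, meta_suffix: str = ".yaml", query_suffix: str = ".sql") -> list[str]:
    """Walk a detections directory recursively (nested or --flat layout) and check it."""
    root = Path(directory)
    rel = [p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()]
    return unpaired(rel, meta_suffix, query_suffix)
```

Running `unpaired_in_dir("detections")` from the repo root returns an empty list when every detection is complete. Point it at the detections directory rather than the repo root, since workflow files under .github are YAML too.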

Our goal is that adopting detection as code shouldn't take more than 10 or 15 minutes to figure out, and the least we can do is spare you from worrying about importing your initial state, weird naming collisions, managing complicated state files, and more.
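To make the naming-collision problem concrete: detections named "Okta Brute Force" and "okta_brute_force" normalize to the same file name, and on a case-insensitive filesystem (macOS's default) even names that differ only by case collide. Below is a minimal sketch of one way to assign unique, filesystem-safe stems by slugifying and appending a numeric suffix. This is an illustrative assumption, not how the runreveal CLI actually names files, and `slugify` and `assign_stems` are hypothetical helpers.

```python
import re


def slugify(name: str) -> str:
    """Lowercase a name and collapse every run of non [a-z0-9] characters into '-'."""
    slug = re.sub(r"[^a-z0-9]+", "-", name.lower()).strip("-")
    return slug or "detection"  # fall back when nothing usable is left


def assign_stems(names: list[str]) -> list[str]:
    """Give each detection a unique file stem, appending -2, -3, ... on collisions.

    Lowercasing first means names that differ only by case still end up in
    distinct files, which matters on case-insensitive filesystems.
    """
    used: set[str] = set()
    stems = []
    for name in names:
        base = slugify(name)
        stem, n = base, 2
        while stem in used:
            stem = f"{base}-{n}"
            n += 1
        used.add(stem)
        stems.append(stem)
    return stems
```

Each stem would then get both a .yaml and a .sql extension, so the two halves of a detection always share a name. Because the first name seen keeps the unsuffixed stem, a real exporter would want a stable input order (sorted names or IDs) so repeated exports don't shuffle file names.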

Developing new detections

Once you want to make your own detection, writing the detection is just a small part of the experience. When you create a new detection, you probably don't want to spend 10 minutes struggling with a yaml format you can't remember, so we made a create subcommand.

:) runreveal detections create
Detection name: dns_changes_via_api
Description: Get alerted whenever a DNS change is made in the API.
Categories (comma separated): cf, signal
Severity: Low
Risk Score (number): 10
ATT&CK classification:
Enabled: true
Query Type: sql
Detection Created:
dns_changes_via_api.yaml
dns_changes_via_api.sql

The output is a yaml file (or a JSON file if that's more your style) and your detection code file. You don't need to use the wizard, but it can be a helpful tool so you don't forget yaml fields and you start from a known good format.
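A wizard like this has to turn free-form answers into typed metadata: the comma-separated categories become a list, the risk score a number, and "true" a boolean. Here's a minimal Python sketch of that conversion step. The `DetectionMeta` field names simply mirror the prompts above and are hypothetical; RunReveal's actual YAML keys may differ.

```python
from dataclasses import dataclass


@dataclass
class DetectionMeta:
    # Hypothetical field names mirroring the wizard prompts, not RunReveal's real YAML keys.
    name: str
    description: str
    categories: list[str]
    severity: str
    risk_score: int
    attack: list[str]
    enabled: bool
    query_type: str


def split_csv(value: str) -> list[str]:
    """Split a comma-separated answer, trimming whitespace and dropping empty items."""
    return [item.strip() for item in value.split(",") if item.strip()]


def from_answers(answers: dict[str, str]) -> DetectionMeta:
    """Convert raw wizard answers (all strings) into typed metadata, failing fast on bad input."""
    name = answers["name"].strip()
    if not name:
        raise ValueError("detection name is required")
    enabled = answers["enabled"].strip().lower()
    if enabled not in ("true", "false"):
        raise ValueError(f"enabled must be true or false, got {answers['enabled']!r}")
    return DetectionMeta(
        name=name,
        description=answers["description"].strip(),
        categories=split_csv(answers["categories"]),
        severity=answers["severity"].strip(),
        risk_score=int(answers["risk_score"]),  # raises ValueError for non-numeric input
        attack=split_csv(answers.get("attack", "")),  # optional; left blank in the example above
        enabled=enabled == "true",
        query_type=answers["query_type"].strip(),
    )
```

In this sketch, validating at the prompt means an answer like "ten" for the risk score fails immediately rather than surfacing later in CI.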

Since we're making an example detection, let's look for all entries in our Cloudflare Audit Logs showing DNS changes made by the Cloudflare API. This is a detection I made using the RunReveal search interface.

$ cat dns_changes_via_api.sql
SELECT *
FROM cf_audit_logs
WHERE (receivedAt >= {from:DateTime}) AND (receivedAt < {to:DateTime})
AND (( eventName = 'create' AND interface = 'API' AND actorType != 'system' ))

Now that the detection is written, we probably want some idea of how noisy it is before we put it into production and find out the hard way. The test command exists specifically for testing the detection query. By default it looks back 1 hour, but in this case I'm curious about a longer time period. Let's see how many DNS changes we've made via the API over the past month.

$ runreveal detections test --file dns_changes_via_api.yaml --from now-30d
2024/06/18 15:49:26 Returned results for detection: 2 Rows

Once you're satisfied that you want to put the detection into production, you're ready to push your code!
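The query's `{from:DateTime}` and `{to:DateTime}` placeholders resemble ClickHouse's query-parameter syntax, and the test command presumably fills them from its time window: the last hour by default, or the last 30 days with `--from now-30d`. Because the WHERE clause uses `>=` for the start and `<` for the end, the window is half-open, so back-to-back windows never count the same row twice. The sketch below shows one way to resolve such expressions into parameter values. The accepted grammar (`now`, optionally followed by `-<n>` and one of s, m, h, d), the UTC assumption, and the `resolve_window` name are all assumptions for illustration, not the CLI's documented behavior.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed unit letters for expressions like "now-30d"; hypothetical, not the CLI's documented grammar.
UNITS = {"s": "seconds", "m": "minutes", "h": "hours", "d": "days"}
FMT = "%Y-%m-%d %H:%M:%S"  # plain "YYYY-MM-DD hh:mm:ss" text for DateTime values


def resolve_window(expr: str = "now-1h", now: Optional[datetime] = None) -> dict[str, str]:
    """Turn 'now' or 'now-<n><unit>' into UTC values for the {from} and {to} placeholders."""
    match = re.fullmatch(r"now(?:-(\d+)([smhd]))?", expr.strip())
    if match is None:
        raise ValueError(f"unsupported time expression: {expr!r}")
    end = now if now is not None else datetime.now(timezone.utc)
    amount, unit = match.groups()
    start = end - timedelta(**{UNITS[unit]: int(amount)}) if amount else end
    # The query filters receivedAt >= from AND receivedAt < to: a half-open window.
    return {"from": start.strftime(FMT), "to": end.strftime(FMT)}
```

Calling `resolve_window("now-30d")` mirrors the `--from now-30d` test above, while the default argument mirrors the one-hour lookback.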

Pushing the code

At RunReveal we use GitHub for source control, and we created a GitHub action that makes it easy to push your detections to RunReveal from continuous integration. Under the hood, the action just uses our CLI to run the following command, so RunReveal is easy to integrate with any CI/CD system.

runreveal detections sync -d $INPUT_DIRECTORY

Everyone hates when the build passes but the deploy fails. Because of this, internally we use one action in two separate workflows on our detection repository: one for testing the build and one for deploying any changes after a merge.

The first workflow runs when pull requests are opened, with dry-run set to true. This way, when a PR is opened we can ensure there are no issues with the configuration, and we can see which detections will be modified, created, or deleted before merging.

name: Test Detections
on:
  pull_request:
    branches: [ main ]
  workflow_dispatch:
jobs:
  build:
    runs-on: ubuntu-latest
    name: Sync RunReveal Detections
    steps:
      - uses: actions/checkout@v4
      - name: sync-runreveal-detections
        uses: runreveal/detection-sync-action@v2
        with:
          directory: ./detections
          token: ${{ secrets.RUNREVEAL_TOKEN }}
          workspace: ${{ secrets.RUNREVEAL_WORKSPACE }}
          dry-run: true

The second workflow runs once our pull requests are merged into main, automatically pushing the state of our repository to the RunReveal API. In this case, dry-run is no longer specified.

name: Sync
on:
  push:
    branches: [ "main" ]
  workflow_dispatch:
jobs:
  build:
    runs-on: ubuntu-latest
    name: Sync RunReveal Detections
    steps:
      - uses: actions/checkout@v4
      - name: sync-runreveal-detections
        uses: runreveal/detection-sync-action@v1
        with:
          directory: ./detections
          token: ${{ secrets.RUNREVEAL_TOKEN }}
          workspace: ${{ secrets.RUNREVEAL_WORKSPACE }}

The dry-run tells us exactly which detections will be created, deleted, or modified, and ensures that the files contain all the information necessary to run the detections successfully in production.
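Conceptually, a dry run is a three-way comparison between the detections in the repository and the detections already in RunReveal. As a minimal sketch, assuming each side can be reduced to a map from detection name to a fingerprint of its metadata and query, the plan is just set arithmetic. The `fingerprint`, `plan_sync`, and `report` helpers are hypothetical and not the action's actual implementation.

```python
import hashlib


def fingerprint(metadata: str, query: str) -> str:
    """Hash a detection's metadata and query so a change to either one is noticed."""
    # The NUL separator keeps ("ab", "c") and ("a", "bc") from hashing the same for ordinary text.
    return hashlib.sha256(f"{metadata}\0{query}".encode()).hexdigest()


def plan_sync(local: dict[str, str], remote: dict[str, str]) -> dict[str, list[str]]:
    """Compare name -> fingerprint maps for the repo (local) and the API (remote)."""
    return {
        "create": sorted(local.keys() - remote.keys()),
        "modify": sorted(n for n in local.keys() & remote.keys() if local[n] != remote[n]),
        "delete": sorted(remote.keys() - local.keys()),
    }


def report(plan: dict[str, list[str]]) -> list[str]:
    """Render a plan the way a dry run might print it, one line per change."""
    return [f"{action}: {name}" for action, names in plan.items() for name in names]
```

A dry run would print `report(plan)` and stop, while a real sync would go on to apply each change. Because this sketch keys detections by name, renaming one shows up as a delete plus a create; keying by a stable ID would avoid that.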

Because everything goes through our CLI, there's no CI/CD environment we can't push from, but today we've already set up the tooling and workflows required to make our customers who use GitHub successful and to minimize the time they spend doing yaml-engineering.

Authentication and Security

We wanted to be mindful that our main CLI serves two very different use cases: it runs on our customers' laptops for searching logs and administrator activity, and it's used by CI/CD for pushing state and detections to our API.

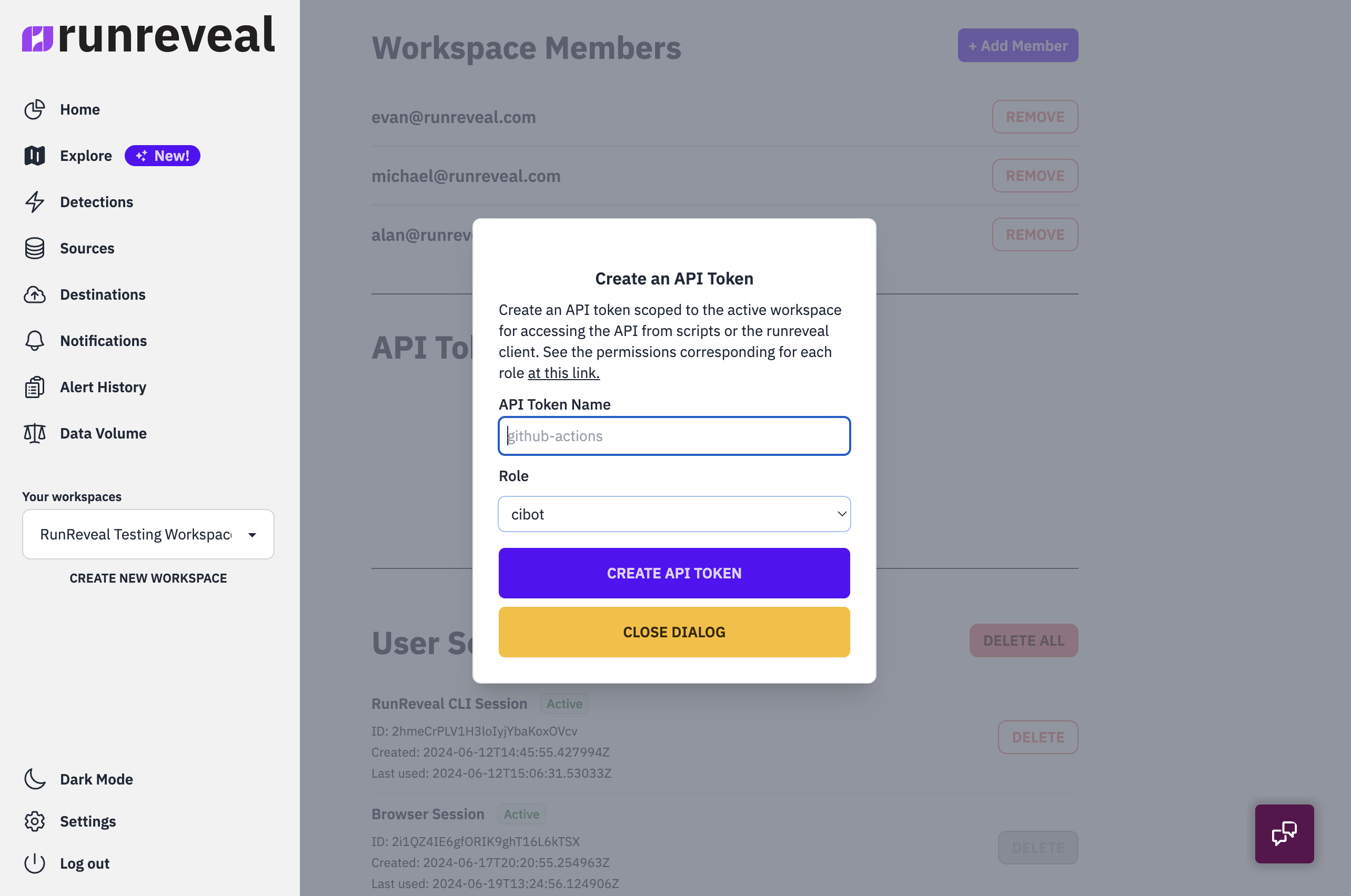

CI/CD systems are notorious for having oodles of admin-scoped tokens, and we didn't want our customers exposed to that kind of risk when using RunReveal. Detection as code supports our RBAC permissions system, and we provide a role called cibot with the least privilege necessary to export, edit, and write detections.

This is easy to configure: when creating a new API token in the UI, just select cibot as its role.

We plan to let our customers create their own custom roles. Our RBAC system already supports this use case, but we haven't exposed the functionality (yet).
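The point of a least-privilege role like cibot is to shrink the blast radius of a leaked CI token. A toy Python model of the idea follows. Only the three detection permissions come from the description of cibot above; the other permissions and the `ROLES` table are hypothetical and don't reflect RunReveal's actual RBAC implementation.

```python
from enum import Enum, auto


class Perm(Enum):
    # Hypothetical permission names; only the three detection ones come from the post.
    EXPORT_DETECTIONS = auto()
    EDIT_DETECTIONS = auto()
    WRITE_DETECTIONS = auto()
    SEARCH_LOGS = auto()    # in this toy model, outside cibot's scope
    MANAGE_TOKENS = auto()  # in this toy model, an admin-only capability


# Toy role table: admin gets every permission, cibot only the detection-as-code ones.
ROLES: dict[str, frozenset[Perm]] = {
    "admin": frozenset(Perm),
    "cibot": frozenset({Perm.EXPORT_DETECTIONS, Perm.EDIT_DETECTIONS, Perm.WRITE_DETECTIONS}),
}


def allowed(role: str, perm: Perm) -> bool:
    """Deny by default: unknown roles get no permissions."""
    return perm in ROLES.get(role, frozenset())
```

In this model, a leaked cibot token can change detections but can't mint new tokens, and a custom role would simply be another entry in `ROLES` with its own permission set.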

What's next?

Detection as code is a basic need for security teams in 2024, not a key selling point, but the experience needs to support the workflows engineers actually go through. We plan to keep polishing this product and move it to general availability later this year, once the experience is up to our liking and we've shaved down any rough edges.

We have some of our biggest announcements planned for the next few weeks and we're working on launching them. We're planning to open-source some new technology and release some innovative products that have the opportunity to change the way we all approach detection and log management.

If you'd like a sneak preview, drop us your email or schedule a meeting with us, and we'll happily show you all of the details before launch.

RunReveal is here to help security teams handle their security data. Security teams are overwhelmed with noise, burdened with dozens of different data formats, and struggling to afford their detection stack.

With RunReveal the approach is different. We've built a simple tool for collecting, detecting, and making use of your security data without all of the noise or frills you don't need.