How We Built the RunReveal MCP Server

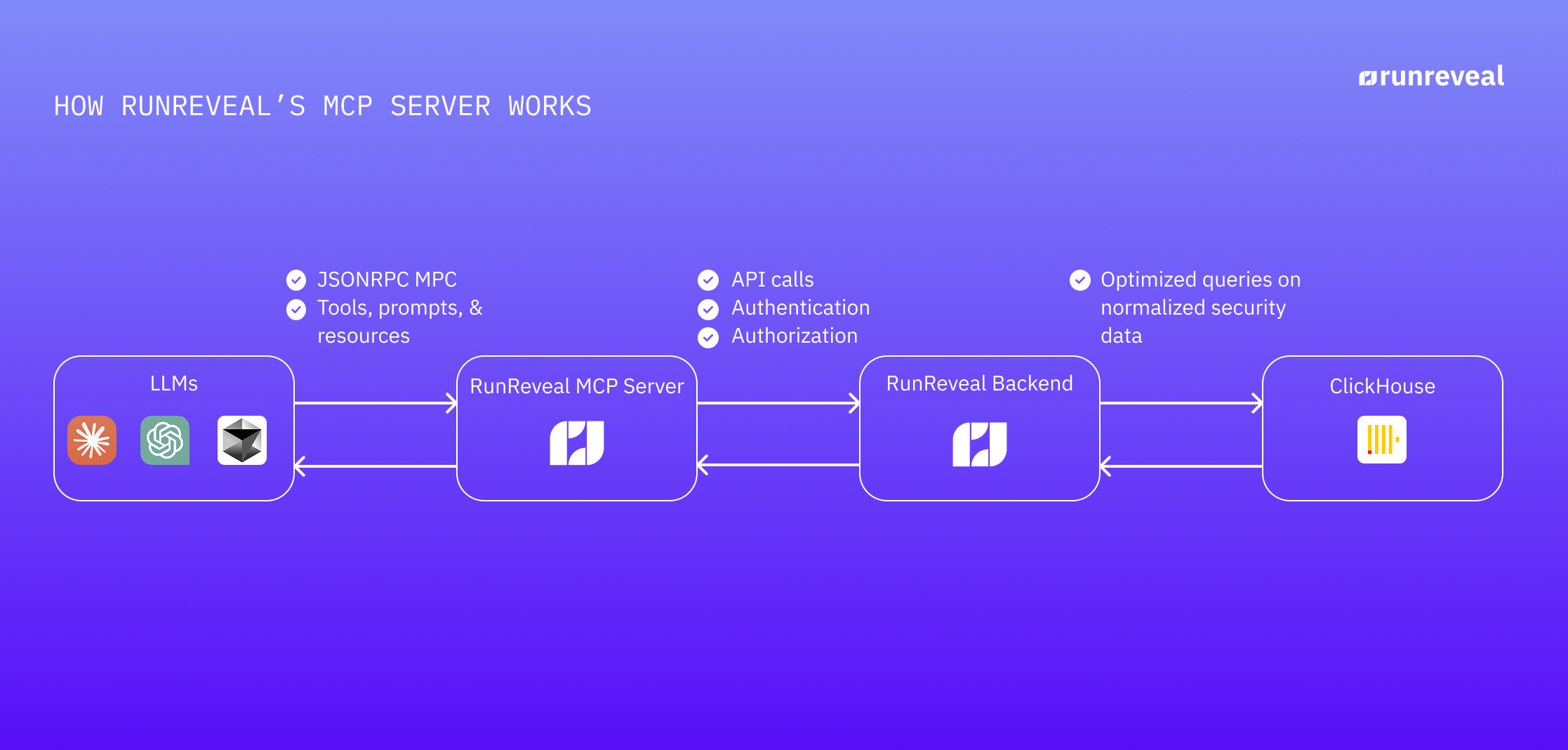

Learn how RunReveal built a custom MCP server while leveraging ClickHouse for faster security investigations and detection management.

The current wave of Artificial Intelligence is fundamentally changing how we interact with computers and data. As large language models (LLMs) become more capable, the value of applications is increasingly shifting toward data modeling and workflow orchestration rather than specific UIs or APIs that end users directly interact with.

We're entering an era where application interfaces must be multi-modal, serving humans through traditional UIs, programs through APIs, and AI agents through protocols like the Model Context Protocol (MCP). The most valuable software will bridge these interaction modes, providing consistent access to underlying data and workflows regardless of who or what is accessing them.

Why Build Our Own MCP Server?

We evaluated a number of SDKs for implementing MCP but were ultimately left wanting more. We believe the future of application interfaces will be multi-modal:

- UIs exist for viewing and reviewing data, helping humans understand system state

- APIs will continue to serve "permanent" programmatic interactions

- MCP will enable transient resource exposure in contexts like LLM sessions

Our goal was to create a unified data model that can be accessed through multiple interfaces — whether by humans, traditional programs, or generative AI agents. By writing our own RPC implementation using generics and code generation, we're able to easily support generating JavaScript clients, Go clients, and the MCP Server since they all use the same types and API endpoints.

This approach has the added benefit of simplified code maintenance — we don't have to maintain separate handlers for each modality since they all leverage the same underlying implementation.

None of the MCP SDKs we've seen were built with this multi-modality in mind. Instead, they introduce more code to maintain on top of preexisting APIs.

The Critical Role of ClickHouse in Our MCP Implementation

A key factor in the effectiveness of our MCP server is our use of ClickHouse as our database foundation. This decision dramatically enhances security investigations in several ways compared to AI SOC tools that rely on external SIEMs:

- Unparalleled performance: An optimized database schema on ClickHouse delivers speed that outperforms any existing SIEM on the market today. We're reducing query times from minutes to seconds or even milliseconds, so LLM-driven investigations can be conducted much more quickly and efficiently.

- Flexible trust boundaries: Our deployment model allows customers to keep their data within their trust domain. If desired, they can deploy ClickHouse, RunReveal, and their LLM provider in the same VPC, ensuring sensitive data never leaves their security perimeter.

- Schema optimized for AI analysis: ClickHouse's schema supports strong typing, clear indexes indicating optimal query patterns, and column comments describing the purpose of each field. Combined with views built on top of raw data, this creates an environment where LLMs can perform autonomous analysis with capabilities unmatched by other SIEMs.

These advantages made it imperative that we build our MCP server in-house. We needed to ensure that our role-based access control to data is strictly enforced while maintaining optimal performance. These requirements simply wouldn't have been possible with an off-the-shelf MCP implementation.

Our Implementation Approach

Our approach focuses on simplicity and on leveraging our existing API infrastructure. At its core, MCP is just JSON-RPC 2.0 with a few required methods, which makes it simpler than many make it out to be.

Because it's so simple, we decided to implement it at the "line protocol" level: we define the raw JSON-RPC request and response objects and use those directly instead of bringing in a new dependency.
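As a rough sketch of what "line protocol" level means, the JSON-RPC 2.0 envelopes can be modeled as plain Go structs and dispatched on the method name. The struct and function names here are illustrative assumptions rather than RunReveal's types. The `tools/list` method name and the standard error codes come from the MCP and JSON-RPC specifications.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Request is a JSON-RPC 2.0 request envelope.
type Request struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      json.RawMessage `json:"id,omitempty"`
	Method  string          `json:"method"`
	Params  json.RawMessage `json:"params,omitempty"`
}

// Error is a JSON-RPC 2.0 error object.
type Error struct {
	Code    int    `json:"code"`
	Message string `json:"message"`
}

// Response is a JSON-RPC 2.0 response envelope; exactly one of Result or
// Error is set.
type Response struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      json.RawMessage `json:"id,omitempty"`
	Result  any             `json:"result,omitempty"`
	Error   *Error          `json:"error,omitempty"`
}

// handle decodes one message and dispatches on the method name. Only
// tools/list is stubbed out; a real server handles more methods.
func handle(raw []byte) Response {
	var rq Request
	if err := json.Unmarshal(raw, &rq); err != nil {
		// -32700 is the JSON-RPC "parse error" code; the id is null here.
		return Response{JSONRPC: "2.0", ID: json.RawMessage("null"), Error: &Error{Code: -32700, Message: "parse error"}}
	}
	switch rq.Method {
	case "tools/list":
		return Response{JSONRPC: "2.0", ID: rq.ID, Result: map[string]any{"tools": []any{}}}
	default:
		// -32601 is the JSON-RPC "method not found" code.
		return Response{JSONRPC: "2.0", ID: rq.ID, Error: &Error{Code: -32601, Message: "method not found"}}
	}
}

func main() {
	out, err := json.Marshal(handle([]byte(`{"jsonrpc":"2.0","id":1,"method":"tools/list"}`)))
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out)) // {"jsonrpc":"2.0","id":1,"result":{"tools":[]}}
}
```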

Here's how that looks in practice.

First We Define Functions with Standard Signatures

We start by defining functions with a consistent signature:

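    // RPCMethod is the typed signature that every API method implements.
    // It is parameterized on the request and response types so that a method
    // such as LogsQuery can be passed to RPC directly.
    type RPCMethod[Rq, Rp any] func(ctx context.Context, rq Rq) (rp Rp, err error)

    // RPC adapts a typed method into an http.Handler.
    func RPC[Rq, Rp any](
        callme RPCMethod[Rq, Rp],
        opts ...RPCOption,
    ) http.Handler {
        return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
            // Serialization
            // Validation
            // Calling the registered method
            // Error Handling
        })
    }

Here's an example of a concrete method that implements this pattern: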

    func (s *Server) LogsQuery(ctx context.Context, req types.LogsQueryRequest) (*types.LogsQueryResponse, error) {
        // Implementation details
    }

Then We Register the Handler with the Mux Server Router

We register these methods with our HTTP server using Gorilla Mux. Each function now serves as both an MCP tool call and an API route that our UI and other clients can use, with authentication and routing handled in one place. 💥

    r := mux.NewRouter()
    // ...
    r.Handle(types.RouteLogsQuery,
        s.AuthWksp(rpc.RPC(s.LogsQuery, rpc.WithTag("mcp", "true")), PermQueriesRead),
    ).Methods("POST", "OPTIONS").Name("run_query")

Generating the MCP Server Tool Calls

MCP has three core concepts: Prompts, Resources, and Tools. Prompts let an application define workflows for the LLM, so external applications can kick-start or enhance them. Resources provide the model with schemas or data to use as context for the problem at hand. Tools are remote functions the model can call to perform an action. The rest of this section covers how we generate tools.
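For reference, an MCP server advertises each tool to clients with a name, a description, and a JSON Schema for its arguments (`inputSchema`). The sketch below derives such a descriptor from a Go request type using reflection. It is a deliberately small illustration: `QueryRequest`, `schemaFor`, and the tool description are hypothetical, and the schema builder only handles flat structs with string, integer, and boolean fields.

```go
package main

import (
	"encoding/json"
	"fmt"
	"reflect"
	"strings"
)

// QueryRequest is a hypothetical request type, not RunReveal's actual one.
type QueryRequest struct {
	Query string `json:"query"`
	Limit int    `json:"limit,omitempty"`
}

// Tool mirrors the fields MCP uses to advertise a tool to a client.
type Tool struct {
	Name        string         `json:"name"`
	Description string         `json:"description"`
	InputSchema map[string]any `json:"inputSchema"`
}

// schemaFor builds a very small JSON Schema from a flat struct value.
// Fields without "omitempty" in their json tag are treated as required.
func schemaFor(v any) map[string]any {
	t := reflect.TypeOf(v)
	props := map[string]any{}
	required := []string{}
	for i := 0; i < t.NumField(); i++ {
		f := t.Field(i)
		name, opts, _ := strings.Cut(f.Tag.Get("json"), ",")
		if name == "" {
			name = f.Name
		}
		var typ string
		switch f.Type.Kind() {
		case reflect.String:
			typ = "string"
		case reflect.Int, reflect.Int64:
			typ = "integer"
		case reflect.Bool:
			typ = "boolean"
		default:
			continue // unsupported kinds are skipped in this sketch
		}
		props[name] = map[string]any{"type": typ}
		if !strings.Contains(opts, "omitempty") {
			required = append(required, name)
		}
	}
	return map[string]any{"type": "object", "properties": props, "required": required}
}

func main() {
	tool := Tool{
		Name:        "run_query",
		Description: "Run a query against log data (illustrative description).",
		InputSchema: schemaFor(QueryRequest{}),
	}
	out, err := json.MarshalIndent(tool, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```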

We define a data structure to hold information about each RPC route:

    // RPCToolData contains info for a single RPC route
    type RPCToolData struct {
        RoutePath     string
        RouteMethod   string
        ToolName      string
        MethodName    string
        RequestType   string
        ResponseType  string
        RequestObject any    // Actual request object type for schema generation
        SchemaJSON    string // JSON schema as a string
    }

The magic happens when we walk the route tree to find handlers that implement our RPC interface, preparing the necessary objects to be passed into our code generation template below.

    func collectRPCTools(r *mux.Router, verbose bool) []RPCToolData {
        var rpcTools []RPCToolData

        err := r.Walk(func(route *mux.Route, router *mux.Router, ancestors []*mux.Route) error {
            // Extract path, methods, and handler info
            pathTemplate, err := route.GetPathTemplate()
            // ...

            // Look for handlers with MCP tags. Walk takes a callback, so
            // skipping a route means returning nil rather than using continue.
            if _, ok := tpl.Tags["mcp"]; !ok {
                return nil
            }

            // Generate JSON schema for the request object
            schema, err := generateJSONSchema(tpl.RequestObject)
            // ...

            // Add to our tools collection
            rpcTools = append(rpcTools, RPCToolData{
                RoutePath:    pathTemplate,
                RouteMethod:  method,
                ToolName:     toolName,
                MethodName:   methodname,
                RequestType:  tpl.RequestType,
                ResponseType: tpl.ResponseType,
                SchemaJSON:   schema,
            })
            return nil
        })
        if err != nil {
            // Illustrative handling; the walk error must not be silently dropped.
            log.Printf("error walking routes: %v", err)
        }

        return rpcTools
    }

Finally, Generate the MCP Code from Templates

Using a Go template, we can generate many tools and routes efficiently: we walk through each method and create the corresponding tool call in the server, passing in the context that describes each tool's capability along with the schemas for its requests and responses.

    func generateMCPHandlers(w io.Writer, pkgName string, rpcTools []RPCToolData) error {
        // Parse templates
        baseTmpl, err := template.New("base").Funcs(getFuncMap()).Parse(baseMCPTemplate)
        // ...

        // Execute templates
        if err := baseTmpl.Execute(w, map[string]any{
            "Package":  pkgName,
            "RPCTools": rpcTools,
        }); err != nil {
            return fmt.Errorf("error executing base template: %w", err)
        }

        // Process each tool
        for _, tool := range rpcTools {
            if err := toolTmpl.Execute(w, tool); err != nil {
                return fmt.Errorf("error executing tool template for %s: %w", tool.ToolName, err)
            }
        }

        return nil
    }

What This Means for RunReveal and Our Customers

At RunReveal, we believe that AI tools like MCP servers aren't about replacing humans. They're about reducing the learning curve of adopting new products and enhancing productivity. By exposing our application's capabilities through MCP, we're making it dramatically easier to run investigations against your SaaS logs and to create detections from those investigations.

Building our own MCP server has let us iterate rapidly on these capabilities while keeping a clean, consistent codebase that builds on our existing API infrastructure. It also positions us to support all the ways people use the RunReveal product over the long term.
